Upholding Research Integrity and Publishing Ethics

July 12, 2017

Why is what we do in peer review important?

What we do in peer review and research integrity continues to earn research the place it deserves “as the bedrock of public policy and the solutions to our most urgent problems, from protecting public health to mitigating climate change.” Liz Ferguson, VP of Publishing Development at Wiley, said at the Wiley Executive Seminar in London in April 2017: “Peer review is the bottleneck, or pain point, we hear most about. It’s also the single most valuable part of the service journals and publishers provide in a digital age.” We certainly hear about those pain points when peer review and the publishing process go wrong.

What happens when peer review and quality assurance go wrong?

Retractions are often viewed as what happens when peer review and quality assurance go wrong. They’re published when research is found to be misleading, unsafe, or fraudulent. But it is a bit more complicated than that. Perversely, retractions can also show that research and editorial processes are working well, stewarding and curating the inherently uncertain world of research.

The uncertain world: Retractions as good practice

Retractions that signal good academic practice are not hard to find. My current favorite is the story, reported by Nature, of Pamela Ronald, a crop scientist at the University of California, Davis. When Ronald and her colleagues found they couldn’t reproduce their work on disease resistance in rice (more on reproducibility later), they went to extraordinary lengths to understand what had gone wrong, to communicate with the editors at Science who had published their work, and to retract the paper. This is great academic practice, even though the outcome, a retraction, is not likely to have been one that Ronald and colleagues anticipated or were overjoyed with. Richard Mann’s story (about computational biology and shrimp) also makes good reading. The lesson from both Ronald and Mann is that retractions for honest error are good practice and not to be feared.

Fake peer reviewers

Retractions also, of course, signal poor academic practice. The recent retraction of 107 cancer papers is arguably a case of identity fraud. These 107 papers were retracted after the publisher discovered that their peer review process had been compromised by fake peer reviewers. It’s not clear that the researchers involved did this wittingly. It may have been the fault of a third party they paid to help with language editing and submission. Spotting fake peer reviewers is hard, and this problem is not new: a little over two years ago, a publisher retracted 64 papers from 10 journals for the same reason, and the cheating is getting more sophisticated.

Research misconduct

And then there are retractions for research fraud. Retraction Watch publishes a “leaderboard” featuring the most prominent offenders. Members of this gallery include researchers who committed misconduct by making up their research, with Yoshitaka Fujii at the top with 183 retractions. It has been reported that Fujii simply made up much of the data he published throughout his career. A sophisticated investigation initiated by anesthesiology journal editors eventually led to those retractions. The tool used in that investigation, created by John Carlisle (an anesthetist working in the UK), has recently been used again to examine more than 5,000 articles published across 8 journals. Carlisle’s method, and others like it, could be used before publication as part of our editorial workflows (a little more on tools below).

Reproducibility: Research is a human endeavor

Perhaps a more pervasive problem than outright fraud is the challenge of reproducibility. While some of the headlines are extreme, there is evidence that reproducing published research isn’t always as straightforward as it should be. Nature and others refer to it as a “crisis.” This is, perhaps, an unfortunate choice of language. It is true that not everything that’s peer-reviewed and published is “right,” but perhaps this should not be a surprise: what editors, journals, and publishers aim to publish is the best version of what we know right now. Science is a human endeavor, and scientific research is a messy business. It’s important that we recognize that published research contains uncertainty and sometimes errors. In turn, to do our job well as editors and publishers of journals, we need editorial processes, technology, and a focus on “quality” that can cope with this messiness and ambiguity.

These ideas are central to the work of COPE, the Committee on Publication Ethics. COPE helps us manage that uncertainty with clear processes (in the form of flowcharts) for the issues we’re presented with, with case histories that show how others have handled similar situations, and with much more. [Disclosure: Chris Graf volunteers as Co-Chair at COPE.]

Quality: “Integrity” first, and then “impact”

The concepts “integrity” (by which we mean reliability and trustworthiness and, thus, reproducibility) and “ethics” (by which we mean appropriate conduct, i.e., no fraud or misconduct such as fake peer reviewers or data fabrication), followed by “impact,” describe what quality should mean in research publishing.

It’s arguable that all journals could view “integrity” in a similar way. And it’s acceptable that different journals will view “impact” differently: not every journal can be Nature or Science, nor should every journal want to be. “Integrity,” perhaps a more nuanced and meaningful concept than “sound science,” should be a benchmark for editorial quality in academic publishing. Only after assessing “integrity” comes an assessment of “impact.”

Integrity: Top areas for focus

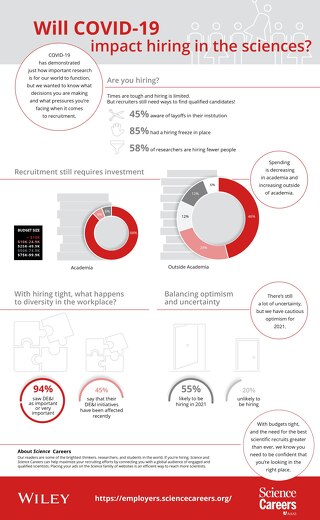

An informal survey of Wiley editorial staff in May 2017 revealed the publishing ethics and research integrity issues that are “top of mind.” The figure here shows the top 5 concerns expressed by our colleagues.

It’s not just Wiley employees who highlight many of these areas for focus. The STM Association publishes an annual summary of technology trends. This year, looking ahead to 2021, its focus is “trust and integrity,” defined in 4 segments:

- Accuracy and curation (with, for example, ideas in reproducibility, integrity checks, and editorial process innovation)

- Smart services (with, for example, ideas in automating integrity checks and reporting guidelines, with feedback for authors to help them report their work as transparently as possible)

- Serving individual researchers (with, for example, ideas in tracking individuals and their contributions, such as using ORCIDs and RRIDs)

- Collaboration and sharing (with, for example, ideas in the “open” space, like open science in the form of lab books and peer review).

How we peer review and publish research at Wiley is built on the great work of our editors and publishing teams. This work is always a collaboration with researchers and academic communities around the world who write, peer review, and then build upon the work we publish with their own research. Looking more widely, funders and institutions shape how we work when they decide how to fund and reward research and academic work, and how to support researchers with training and mentorship.

I often quote Ginny Barbour, the former Chair of COPE, who said back in 2015 that “We need a culture of responsibility for the integrity of the literature… it’s not just the job of editors.” In my words, this means that, when it comes to research integrity and publishing ethics, this is a road we must travel together.

How will we build that culture of responsibility?

Traveling this road together will require careful organization and small, evenly measured steps. Some parts of the road we’ll run along; other parts we’ll need to walk. Our plan at Wiley, to help make our ambitions real, includes:

- Continued work with our virtual team of editorial, content management, legal, and communications colleagues to respond to concerns and retraction requests as they are raised, to collect and record data on trends, to manage investigations proactively where warranted, and to act on the insights we glean to enhance how we support integrity in peer review and research publishing.

- Collaborations with our colleagues around the world to make their expertise available to others in the form of simple guidance and advice. For example, Alexandra Cury published 5 tips to prevent peer reviewer fraud in May 2017, sharing the expertise she has gained from her work with editors as a journal manager.

- Editorial initiatives and experiments designed to improve transparency and openness, and thus reproducibility. We are pleased to be an organizational signatory to the TOP guidelines. We publish several journals (the European Journal of Neuroscience in particular) that are leading proponents of the “two-stage” peer review used in the Registered Reports model. We will continue to encourage and support journals that experiment with this model, and others like it.

- New experiments and investments in people and technology that support editors, peer reviewers, and research and academic authors. For example, quite independently, the journal Addiction (published by Wiley on behalf of the Society for the Study of Addiction) is running a pilot with Penelope Research. Addiction uses Penelope Research technology to check automatically whether authors have followed the reporting standards required in its instructions for authors, and uses that automated feedback to help its editors better support authors before publication.

What do you think? How might we collaborate? We’d love to know what’s top of your mind, so that we can help address it together. Please let us know at publication.ethics@wiley.com. And thank you.

Note: Several of the themes discussed here have been covered in COPE’s Digest newsletter, using many of the same words.